I run a four-node Nutanix Community Edition cluster at home, on a batch of old HP DL360s scavenged from the office when they were throwing things out some years ago. It’s generally reliable; occasionally there’s a network glitch that takes out a bunch of VMs, but most of the time it stays up, happy and hale.

Hardware failures happen, however, particularly to disks. The cluster has 28 disks, so it’s inevitable that some of them wear out, particularly since my dusty basement is hardly a pristine data center environment. The cluster also relies on SSDs, which take most of the I/O from the VMs and also host the Controller VMs (CVMs), so on occasion the SSDs wear out and fail. Those are a huge hassle to repair, because you essentially have to reinstall the CVM and hope that the cluster recognizes and readmits it.

It’s doubly hard if the “install” user has disappeared, which apparently happens during the cleanup process that occurs after a cluster software upgrade. I had installed the cluster using a 2017 image, and then upgraded it in mid-2019; when I went back to try and repair a failed SSD, none of the install scripts were there, and even the install user had been removed from all four hosts, making it impossible to rebuild the CVM on the new drive.

To get around this, I went and found the full install image (which normally you stick on a USB or SD card to use as a boot disk), which contained the necessary files, but figuring out how to mount it and get the files off without actually burning a USB stick was a bit of a trial.

Mounting on Windows proved nearly impossible, so I copied the file to where one of my Linux VMs could get at it. The issue is that the disk image is partitioned, just like a regular physical or virtual disk would be, but of course the operating system doesn’t recognize a file as a disk device. Luckily, the “mount” command has an “offset” option that tells it how many bytes into the file the actual filesystem starts. You can figure out that offset value using fdisk:

    [root@mybox Nutanix]# fdisk -l ce-2019.02.11-stable.img
    Disk ce-2019.02.11-stable.img: 7444 MB, 7444889600 bytes, 14540800 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk label type: dos
    Disk identifier: 0x000d3ba3
                       Device Boot      Start         End      Blocks   Id  System
    ce-2019.02.11-stable.img1   *        2048    14540799     7269376   83  Linux

It even sticks a “1” on the end of the image name, just like a disk partition, though if you tried “mount ce-2019.02.11-stable.img1 /mnt” it would have no idea what you were talking about.

To figure out the offset number, note that the partition starts at sector 2048, and each sector is 512 bytes; 2048 * 512 = 1048576, so that’s the offset. You can then mount it read-only:

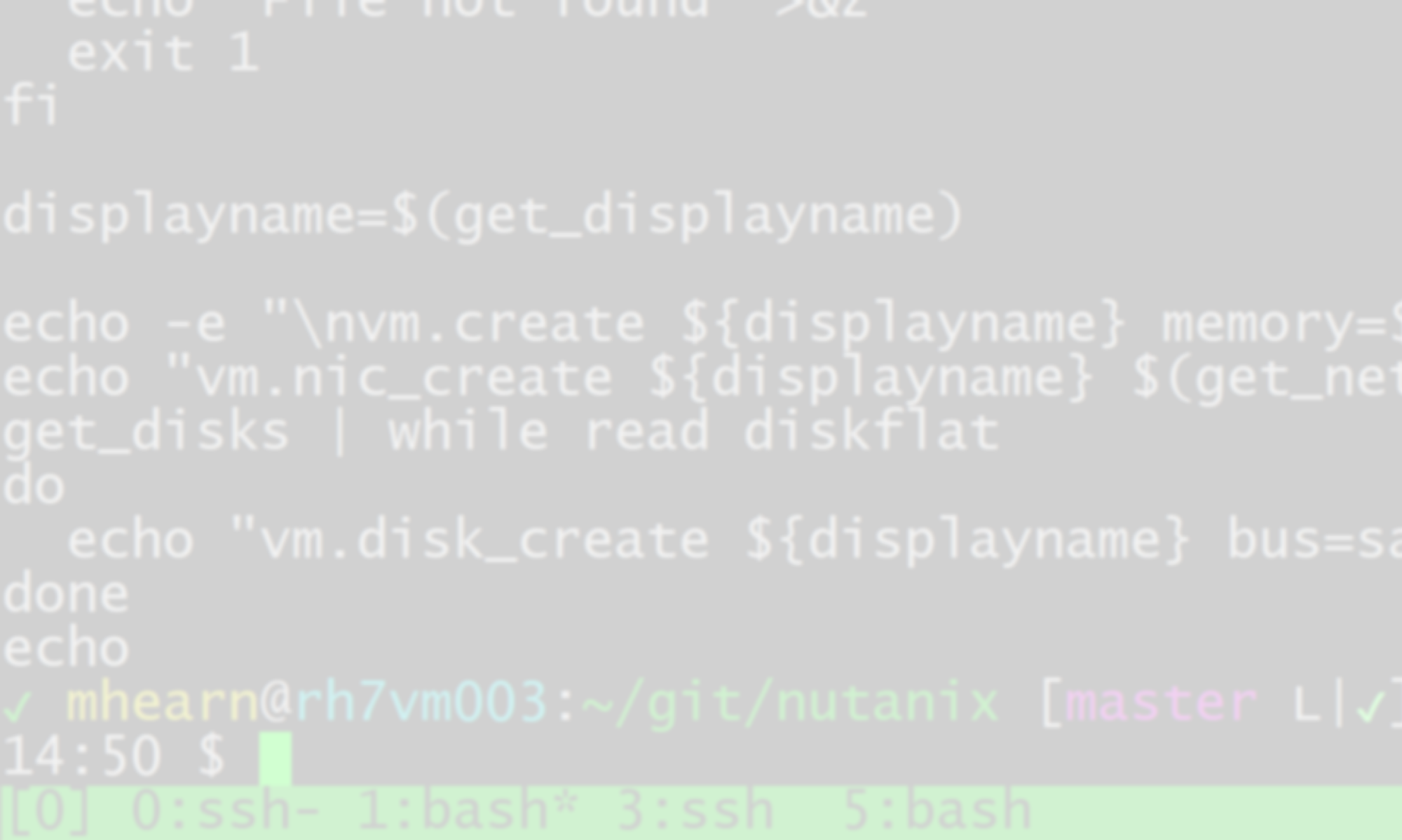

    mount -o ro,loop,offset=1048576 ce-2019.02.11-stable.img /mnt/loop

After that I just used rsync to copy the data over to the affected host, recreated the install user and group (both using uid/gid 1000), and was able to rebuild my CVM.

Unfortunately I ran ~install/cleanup.sh first, which seemed to wipe all the configuration from the host, and made the cluster forget all about it, after which I was unable to add the host back in, possibly because of the failing SD card on another host…my cluster is a bit FUBAR at the moment. But at least I got the disk replaced!
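For anyone who would rather not do the sector arithmetic by hand, here is a rough shell sketch of the same steps. The function names `partition_offset` and `mount_image_ro` are made up for illustration. It assumes the image has one bootable partition, marked with `*` as in the fdisk output above, and that fdisk prints a `Units ... = 512 bytes` line. Treat it as a starting point rather than a tested tool.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, not part of any Nutanix tooling.

# Read `fdisk -l` output on stdin and print the byte offset of the first
# partition flagged bootable ("*" in the second column).
partition_offset() {
    awk '
        /^Units/ { size = $(NF - 1) }                     # "... = 512 bytes" -> 512
        $2 == "*" && !seen { start = $3; seen = 1 }       # start sector of boot partition
        END { if (seen && size) print start * size }      # sectors * bytes per sector
    '
}

# Mount the bootable partition of an image file read-only (needs root).
# Usage: mount_image_ro IMAGE MOUNTPOINT
mount_image_ro() {
    image=$1
    mountpoint=$2
    # LC_ALL=C keeps fdisk output in the untranslated format parsed above.
    offset=$(LC_ALL=C fdisk -l "$image" | partition_offset)
    if [ -z "$offset" ]; then
        echo "no bootable partition found in $image" >&2
        return 1
    fi
    mount -o "ro,loop,offset=$offset" "$image" "$mountpoint"
}

# Example (as root):
#   mount_image_ro ce-2019.02.11-stable.img /mnt/loop
```

Fed the fdisk output shown earlier, `partition_offset` prints 1048576, the same value worked out by hand.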