After taking a particularly good course on Kubernetes hosted by the Linux Foundation, I decided I wanted to finally get a cluster up and running in my home lab. I’d managed to build a 3-node cluster (one master and 2 “worker” nodes), but was eager to see if I could set up a “highly available” cluster with multiple masters.

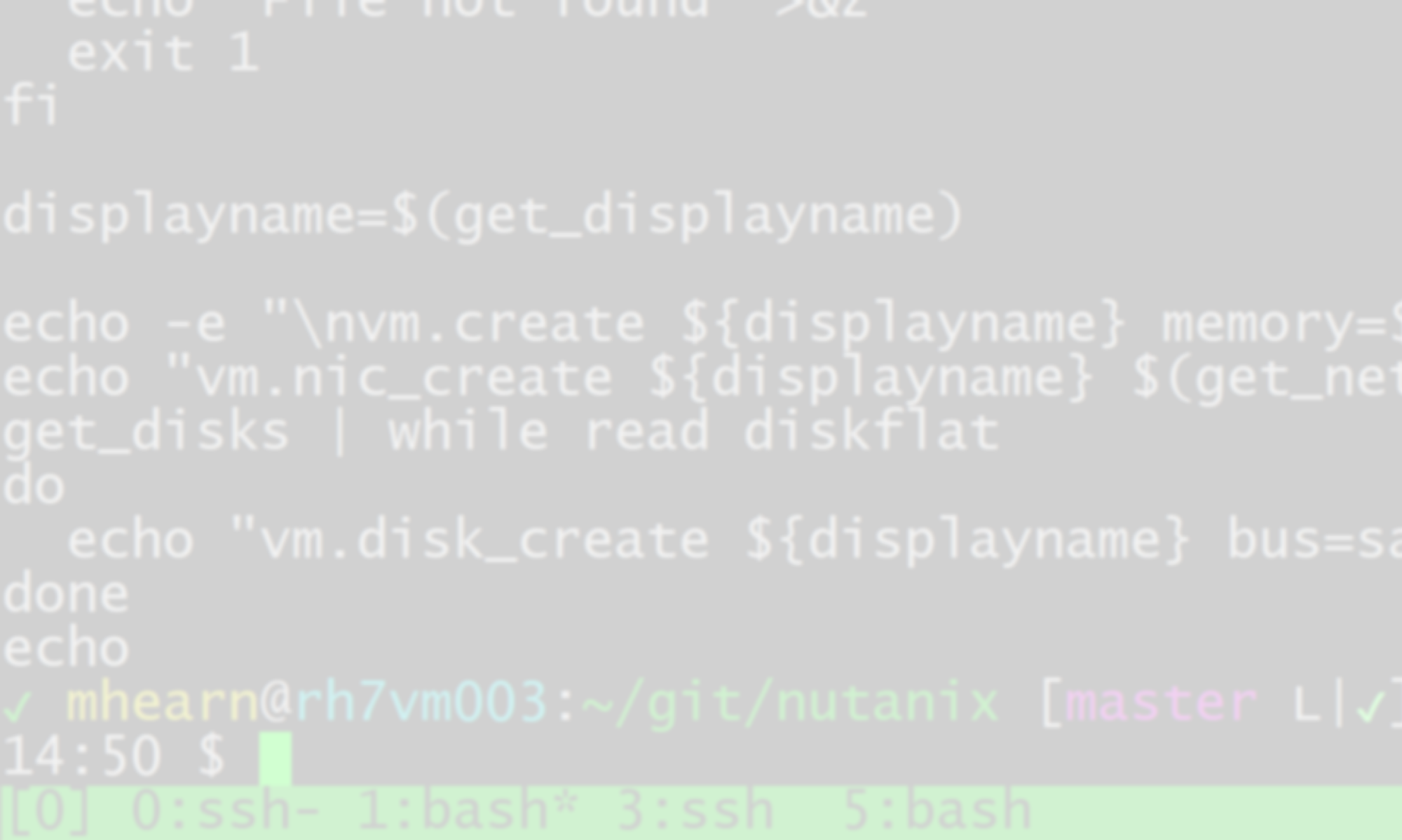

In my home lab, I have a Nutanix “Community Edition” cluster, and I can use Terraform and Ansible to quickly spin up new VMs. I found a decent document on kubernetes.io that explains the process of setting up multiple masters, though it’s not entirely complete, as we’ll discover.

The first step was to spin up the master nodes, which I named (oh-so-imaginatively) kubemaster01, kubemaster02, and kubemaster03, all running Ubuntu 16.04.5. The only reason I chose Ubuntu was that it’s what we had used in the training course, and I knew it would work. I may try to add some CentOS 7 nodes to the cluster somewhere down the line, just to see how well they work.

For my purposes, I don’t believe the masters have to be very big: just 2 cores and 2GB of memory, along with the default 20GB root disk; I can always expand them later. The nodes are named kubenode01, kubenode02, and kubenode03, with the same specs; because they do most of the “work” of the cluster, I’ll need to keep a close eye on their resource usage and increase it as necessary, particularly if I find I need to build persistent volumes for the applications.

Because I put them all in their own special VLAN, I don’t really need to worry about firewalls; they do run iptables, but Kubernetes seems to be pretty good about self-managing the rules that it needs, or at least, I haven’t had to do any manual configuration.

All the nodes need Docker, kubeadm, and the kubelet installed, plus kubectl for managing the running cluster, though in theory you could get away with installing kubectl only on the masters. They also need swap disabled. I wrote an Ansible role that handles all of that for me. I pinned the Kubernetes tools to version 1.13.1-00, which is what we used in the course and is pretty stable, despite having come out only a few months ago.
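The Ansible role isn’t shown here, but the swap part is easy to sketch in plain shell. The kubelet refuses to start while swap is on (its `--fail-swap-on` flag defaults to true), and `swapoff -a` only lasts until the next reboot, so the swap entries in `/etc/fstab` need to be commented out as well. This is a minimal sketch assuming a conventional fstab layout; `disable_swap_in_fstab` is a hypothetical helper, not part of any tool:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style
# file so swap stays off after a reboot. A backup is kept as <file>.bak.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Match uncommented lines that contain a standalone "swap" field
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

On each node, as root, `swapoff -a && disable_swap_in_fstab /etc/fstab` turns swap off now and keeps it off after reboots.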

To simplify setup, I enabled root ssh access on all the masters, and set up a passphrase-protected key for the root user so I could copy files around as needed.

Once the preliminary steps were out of the way, I found I was going to need a load-balancing proxy. This is the first place where the doc from kubernetes.io falls short (at least, as of February 2019): it says “HAProxy can be used as a load balancer,” but offers no instructions on how to set it up, and while HAProxy documentation abounds, none of it offers advice on setting HAProxy up as a Kubernetes API front end. It took a lot of trial and error, but I finally got it to do what I wanted.

The versions of HAProxy available to download and compile are several minor versions ahead of what’s available from apt or yum, but I figured an older version would probably suffice. To get as recent a packaged version as possible, I built myself an Ubuntu 16.04 VM (named haproxy01) and then upgraded it to Ubuntu 18.04.2. (I haven’t gotten around to making a Packer build for Ubuntu 18 yet.) Then I used apt to install HAProxy 1.8.8, which isn’t much older than the current “stable” release, 1.9.4. By comparison, the latest release Ubuntu 16 has is 1.6.3, and CentOS 7 is only up to 1.5.18.

```bash
apt update && apt install haproxy
```

Because the Kubernetes API server pod listens on port 6443 by default, I needed to forward secure connections on that port to all 3 masters. After a lot of cursing and gnashing of teeth, I got /etc/haproxy/haproxy.cfg to look like this:

```
global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS
    ssl-default-bind-options no-sslv3

defaults
    mode tcp
    log global
    option tcplog
    balance leastconn
    option dontlognull
    option redispatch
    option contstats
    option socket-stats
    timeout server 600s
    timeout client 600s
    timeout connect 5s
    timeout queue 60s
    retries 3
    default-server inter 15s rise 2 fall 2
    backlog 10000

frontend k8s_api_front
    bind *:6443 name https tcp-ut 30s
    default_backend k8s_api_back

backend k8s_api_back
    option tcp-check
    tcp-check connect port 6443 ssl
    server kubemaster01.hearn.local <ip address>:6443 check verify none
    server kubemaster02.hearn.local <ip address>:6443 check verify none
    server kubemaster03.hearn.local <ip address>:6443 check verify none
```

I will be the first to admit that I have no idea what most of the settings in `global` and `defaults` do; many of them were already in the stock haproxy.cfg. The real work happens in the `frontend` and `backend` sections. An HAProxy server can have several of each, pointing traffic in various directions. The `frontend k8s_api_front` section tells HAProxy to listen on port 6443 and forward connections to `backend k8s_api_back`, which distributes them across the 3 master servers, all listening on the same port. Because everything runs in `mode tcp`, HAProxy doesn’t terminate TLS; it passes the encrypted traffic straight through to the API servers, while the `tcp-check connect port 6443 ssl` line makes its health checks complete a TLS handshake with each master (`verify none` skips certificate verification for those checks). (I’ve removed my IP addresses, but they should match the IP addresses of the master nodes.)
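Since the backend section is the part that changes whenever masters are added or removed, it can be handy to generate it rather than edit it by hand. Here’s a minimal sketch of that idea; `render_k8s_api_backend` and its `hostname=ip` argument format are hypothetical conventions of my own, and the 10.0.0.x addresses are placeholders, not my real ones:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print an HAProxy backend that health-checks and
# balances the Kubernetes API servers on port 6443 (the apiserver default).
# Each argument is "hostname=ip", e.g. kubemaster01.hearn.local=10.0.0.11.
render_k8s_api_backend() {
  local port=6443 entry
  printf 'backend k8s_api_back\n'
  printf '    option tcp-check\n'
  printf '    tcp-check connect port %s ssl\n' "$port"
  for entry in "$@"; do
    # ${entry%%=*} is the text before "=", ${entry#*=} the text after it
    printf '    server %s %s:%s check verify none\n' \
      "${entry%%=*}" "${entry#*=}" "$port"
  done
}
```

For example, `render_k8s_api_backend kubemaster01.hearn.local=10.0.0.11 kubemaster02.hearn.local=10.0.0.12 kubemaster03.hearn.local=10.0.0.13` prints a backend equivalent to the one above. However you edit the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks it for errors before you run `systemctl reload haproxy`.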

I also updated my DNS with a CNAME named “k8sapipxy” that points to haproxy01. That name (k8sapipxy.hearn.local) is the API endpoint I used when building all the masters and nodes.
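With the DNS record in place, it’s worth confirming that each hop actually accepts connections on port 6443 before blaming kubeadm. A minimal sketch; `tcp_port_open` and `check_api_path` are hypothetical helpers built on bash’s `/dev/tcp` redirection and coreutils `timeout`, so no `nc` or `telnet` is needed:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to host $1, port $2 opens
# within 3 seconds, using bash's /dev/tcp pseudo-device.
tcp_port_open() {
  # Host and port become $0 and $1 of the inner bash rather than being
  # pasted into the command string.
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Hypothetical check: report whether port 6443 answers on each host given.
check_api_path() {
  local host
  for host in "$@"; do
    if tcp_port_open "$host" 6443; then
      echo "$host:6443 reachable"
    else
      echo "$host:6443 NOT reachable"
    fi
  done
}
```

Before `kubeadm init`, only the proxy should answer, since HAProxy accepts the TCP connection even when no backend is up yet; afterwards, `check_api_path kubemaster01.hearn.local k8sapipxy.hearn.local` should report both as reachable. Because a successful connection to the proxy only proves HAProxy is listening, `curl -k https://k8sapipxy.hearn.local:6443/healthz` is a stronger end-to-end test and should print `ok` once an API server is up behind it.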

Once I got HAProxy working, I was able to set up the first master node, kubemaster01. I modified the kubeadm-config.yaml file a bit from the one provided in the kubernetes.io doc:

```yaml
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: 1.13.1
networking:
  dnsDomain: cluster.local
  podSubnet: 192.168.0.0/16
  serviceSubnet: 10.96.0.0/12
apiServer:
  certSANs:
  - "k8sapipxy.hearn.local"
controlPlaneEndpoint: "k8sapipxy.hearn.local:6443"
```
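One thing worth checking before running `kubeadm init`: `podSubnet: 192.168.0.0/16` matches Calico’s default IP pool, so if your hosts themselves live somewhere in 192.168.x.x, pod addresses will collide with real ones (and the same goes for the 10.96.0.0/12 service range). Here’s a small sketch in pure bash arithmetic, assuming plain dotted-quad IPv4 addresses without leading zeros; `ip_to_int` and `ip_in_cidr` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
# Octets with leading zeros (e.g. "08") would be misread as octal.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed if IPv4 address $1 falls inside CIDR block $2.
ip_in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  # Prefix length -> netmask; bash arithmetic is 64-bit, so trim to 32 bits
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}
```

For example, `ip_in_cidr 192.168.1.20 192.168.0.0/16 && echo overlap` prints `overlap`, a sign that `podSubnet` (and the matching `CALICO_IPV4POOL_CIDR` setting in calico.yaml) should be moved to an unused range.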

Then I could simply run, on kubemaster01:

```bash
kubeadm init --config kubeadm-config.yaml
```

Until I got HAProxy set up properly, I ran into a lot of headaches where kubeadm couldn’t seem to communicate through HAProxy to reach the apiserver pod it had set up, but once HAProxy was working as it should, the command worked fine. `kubeadm init` also prints the `kubeadm join` command that adds other nodes to the cluster; the other master servers use the same command with an extra option that identifies them as masters instead of worker nodes. It should look something like this:

```bash
kubeadm join --token uygjg4.44wh6oioigcstoue \
    k8sapipxy.hearn.local:6443 --discovery-token-ca-cert-hash \
    sha256:e4b834054fc6fb4c063fc404cd5d7779fc1c253e5c1022dff23d9886ab553f6c
```

Save that command somewhere; you’ll need it to join additional masters and nodes. The token is only good for 24 hours, but you can generate a new one with the following command (adding `--print-join-command` makes it print a complete join command as well):

```bash
kubeadm token create
```

And if you’ve lost the CA cert hash, you can recompute it on the first master with the following rather lengthy command:

```bash
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //g'
```
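Both pieces of the join command are easy to wrap for scripting. A minimal sketch: `is_bootstrap_token` checks a token’s shape against the documented bootstrap-token format (a 6-character ID and a 16-character secret separated by a dot, lowercase letters and digits only), and `ca_cert_hash` wraps the pipeline above and adds the `sha256:` prefix that `kubeadm join` expects. Both names are hypothetical, and the hash function assumes an RSA CA key, which is what kubeadm generates:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 has the shape of a kubeadm bootstrap
# token: 6 lowercase letters/digits, a dot, then 16 more.
is_bootstrap_token() {
  [[ "$1" =~ ^[[:lower:][:digit:]]{6}\.[[:lower:][:digit:]]{16}$ ]]
}

# Hypothetical helper: print the value for --discovery-token-ca-cert-hash,
# i.e. "sha256:" plus the SHA-256 of the CA's DER-encoded public key.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}" hex
  hex=$(openssl x509 -pubkey -noout -in "$crt" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //')
  echo "sha256:${hex}"
}
```

A token with the right shape may still have expired; `kubeadm token list` on a master shows which ones are still valid.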

The doc specifies using the Weave plugin for networking, but I was more familiar with Calico, which we had used in the class, so I downloaded the same Calico and RBAC configuration files we’d used in the class:

https://docs.projectcalico.org/v3.3/getting-started/kubernetes/installation/hosted/kubernetes-datastore/calico-networking/1.7/calico.yaml

https://docs.projectcalico.org/v3.3/getting-started/kubernetes/installation/hosted/rbac-kdd.yaml

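These get applied using kubectl on kubemaster01 (kubectl first has to be pointed at the cluster’s admin kubeconfig, `/etc/kubernetes/admin.conf`; the `kubeadm init` output explains how):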

```bash
kubectl apply -f rbac-kdd.yaml
kubectl apply -f calico.yaml
```

Once that’s done, you should be able to use kubectl to monitor the progress of the pod creation on your master:

```bash
kubectl -n kube-system get pods
```

The `-n kube-system` option is necessary because kubectl only lists pods in the current namespace (normally `default`), while the control-plane and networking pods all live in `kube-system`. It should only take a couple of minutes, and you should have a fully functioning K8s master! To add the other masters as actual masters instead of worker nodes, you’ll need to copy all the relevant PKI keys and certificates from your first master to the others, along with the admin kubeconfig file (`admin.conf`):

```bash
# Run as root on kubemaster01 (relies on the root SSH key set up earlier)
for host in kubemaster02 kubemaster03; do
    # kubeadm hasn't created the PKI directories on the new masters yet
    ssh "$host" mkdir -p /etc/kubernetes/pki/etcd
    # Cluster CA, service-account key pair, and front-proxy CA
    scp /etc/kubernetes/pki/ca.crt "$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/ca.key "$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.key "$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.pub "$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.crt "$host":/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.key "$host":/etc/kubernetes/pki/
    # etcd CA
    scp /etc/kubernetes/pki/etcd/ca.crt "$host":/etc/kubernetes/pki/etcd/ca.crt
    scp /etc/kubernetes/pki/etcd/ca.key "$host":/etc/kubernetes/pki/etcd/ca.key
    # Admin kubeconfig
    scp /etc/kubernetes/admin.conf "$host":/etc/kubernetes/
done
```
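Since a single failed `scp` is easy to miss in the loop’s output, it can be worth confirming that everything arrived before running the join. A minimal sketch; `missing_control_plane_files` is a hypothetical helper that prints any of the files copied above that are absent under a given root (default `/etc/kubernetes`) and prints nothing when all of them are present:

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the shared control-plane files that are missing
# under a kubeadm config root. Prints nothing if everything is in place.
missing_control_plane_files() {
  local root="${1:-/etc/kubernetes}" f
  for f in pki/ca.crt pki/ca.key pki/sa.key pki/sa.pub \
           pki/front-proxy-ca.crt pki/front-proxy-ca.key \
           pki/etcd/ca.crt pki/etcd/ca.key admin.conf; do
    [ -f "$root/$f" ] || echo "$root/$f"
  done
}
```

Because `declare -f` prints a function’s definition, the check can run on a new master without installing anything there: `ssh kubemaster02 "$(declare -f missing_control_plane_files); missing_control_plane_files"`.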

You don’t need to do that step for the worker nodes, only for the masters. Once the keys are in place, run the join command generated earlier on the second master, with one extra option at the end:

```bash
kubeadm join --token uygjg4.44wh6oioigcstoue \
    k8sapipxy.hearn.local:6443 --discovery-token-ca-cert-hash \
    sha256:e4b834054fc6fb4c063fc404cd5d7779fc1c253e5c1022dff23d9886ab553f6c \
    --experimental-control-plane
```

The “experimental-control-plane” option indicates that this is an additional master instead of a worker, and it should spin up its own master pods, such as the apiserver and etcd instance. You can monitor the progress of pod creation from your first master:

```bash
kubectl -n kube-system get pods
```

Once all the pods report that they’re up and running, you can run the same kubeadm join on the 3rd master. That should be all that’s necessary: each master ends up with its own apiserver, controller manager, scheduler, and etcd instance, alongside the CoreDNS pods and everything Calico needs. The output should look roughly like this (this listing was captured after the worker nodes had also joined, which is why there are six calico-node and six kube-proxy pods, one of each per node):

```
NAME                                               READY   STATUS    RESTARTS   AGE
calico-node-4649j                                  2/2     Running   0          2d19h
calico-node-8qv9z                                  2/2     Running   0          2d19h
calico-node-h49vp                                  2/2     Running   0          2d19h
calico-node-p9vbm                                  2/2     Running   0          2d19h
calico-node-q5ctt                                  2/2     Running   0          2d19h
calico-node-spfs7                                  2/2     Running   0          2d19h
coredns-86c58d9df4-2ckdt                           1/1     Running   0          2d19h
coredns-86c58d9df4-tdzbt                           1/1     Running   0          2d19h
etcd-kubemaster01.hearn.local                      1/1     Running   0          2d19h
etcd-kubemaster02.hearn.local                      1/1     Running   0          2d19h
etcd-kubemaster03.hearn.local                      1/1     Running   0          2d19h
kube-apiserver-kubemaster01.hearn.local            1/1     Running   0          2d19h
kube-apiserver-kubemaster02.hearn.local            1/1     Running   0          2d19h
kube-apiserver-kubemaster03.hearn.local            1/1     Running   1          2d19h
kube-controller-manager-kubemaster01.hearn.local   1/1     Running   4          2d19h
kube-controller-manager-kubemaster02.hearn.local   1/1     Running   5          2d19h
kube-controller-manager-kubemaster03.hearn.local   1/1     Running   2          2d19h
kube-proxy-44wv7                                   1/1     Running   0          2d19h
kube-proxy-66xj4                                   1/1     Running   0          2d19h
kube-proxy-f28zd                                   1/1     Running   0          2d19h
kube-proxy-kz8bs                                   1/1     Running   0          2d19h
kube-proxy-tzf4v                                   1/1     Running   0          2d19h
kube-proxy-zm74m                                   1/1     Running   0          2d19h
kube-scheduler-kubemaster01.hearn.local            1/1     Running   5          2d19h
kube-scheduler-kubemaster02.hearn.local            1/1     Running   4          2d19h
kube-scheduler-kubemaster03.hearn.local            1/1     Running   3          2d19h
```
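Rather than re-running `kubectl -n kube-system get pods` by hand while waiting, the check can be scripted. A minimal sketch; `not_ready_pods` and `wait_for_kube_system` are hypothetical helpers, and the parser assumes the default column layout shown above (NAME, READY, STATUS, ...), read with `--no-headers` so the header row is skipped. It would also flag pods that finish normally (STATUS `Completed`), which kube-system doesn’t usually have:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin and
# print the name of every pod whose READY count is incomplete (e.g. 1/2) or
# whose STATUS is anything other than Running.
not_ready_pods() {
  awk '{ split($2, ready, "/"); if (ready[1] != ready[2] || $3 != "Running") print $1 }'
}

# Hypothetical wait loop: poll kube-system every 10 seconds until every pod
# is ready. Assumes kubectl is already configured to talk to the cluster.
wait_for_kube_system() {
  local out pending
  while true; do
    # If kubectl itself fails (API not reachable yet), just try again later
    if out=$(kubectl -n kube-system get pods --no-headers); then
      pending=$(printf '%s\n' "$out" | not_ready_pods)
      if [ -n "$out" ] && [ -z "$pending" ]; then
        echo "All kube-system pods are ready."
        return 0
      fi
      # Unquoted on purpose so the pod names print on one line
      echo "Still waiting on:" $pending
    fi
    sleep 10
  done
}
```

Running `wait_for_kube_system` on kubemaster01 after applying the Calico manifests, or after each control-plane join, returns once everything reports Running.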

Once all 3 masters are online, you can go to each worker node and run the kubeadm join command, but without the experimental-control-plane option:

```bash
kubeadm join --token uygjg4.44wh6oioigcstoue \
    k8sapipxy.hearn.local:6443 --discovery-token-ca-cert-hash \
    sha256:e4b834054fc6fb4c063fc404cd5d7779fc1c253e5c1022dff23d9886ab553f6c
```

Each node should happily join the cluster and be ready to host pods! Now I just have to figure out what to do with it…